If you’ve ever wondered about the technical details behind your computer’s performance, you might have heard the terms “cores” and “threads” thrown around. But what do these terms mean, and how do they affect your computer’s processing power?

In this article, we will dive into the world of cores vs. threads and explore the differences between the two. Whether you’re a tech enthusiast or just curious about how your computer works, this article will help you understand the fundamental building blocks of modern computing.

So please sit back, grab a cup of coffee, and let’s get started!

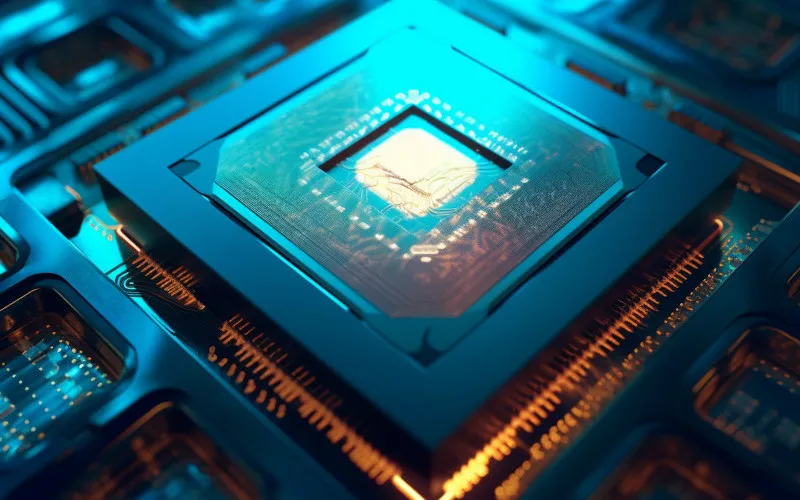

What is a Core?

Alright, let’s talk about cores. At a basic level, a core is a processing unit that performs calculations and executes instructions. Most modern CPUs (central processing units) have multiple cores, which allows them to perform multiple tasks simultaneously.

For example, if you’re running a program that requires a lot of processing power, the operating system might schedule that program’s calculations on one core while another core handles background tasks like downloading updates or playing music.

So, having multiple cores can boost your computer’s performance. However, there are some limitations to how much improvement you can expect. For one thing, not all tasks can be divided up and run simultaneously on multiple cores. Some tasks require a specific order of operations or rely on data only available sequentially.

Additionally, adding more cores to a CPU doesn’t necessarily mean that you’ll see a proportional increase in performance. The portion of a task that must run sequentially caps the achievable speedup, and past a certain point the overhead of coordinating work across cores can outweigh the gain from adding more.
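One common way to put numbers on these diminishing returns is Amdahl’s law, which this article doesn’t name but which fits the point being made. Here is a minimal Python sketch; the function name and the 90% parallel fraction are assumptions chosen for illustration, not measurements of any real program.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Theoretical speedup when only part of a task can run in parallel."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    # The serial part takes the same time no matter how many cores exist;
    # only the parallel part is divided among the cores.
    return 1.0 / (serial_fraction + parallel_fraction / cores)


# Assumed workload: 90% of the work can be parallelized (illustrative only).
for cores in (1, 2, 4, 8, 16, 64):
    print(f"{cores:>2} cores -> {amdahl_speedup(0.9, cores):.2f}x")
```

Under that assumption, going from 16 to 64 cores only moves the theoretical speedup from about 6.4x to about 8.8x, and it can never reach 10x. Note that this simple model only shows the plateau; it doesn’t account for the coordination overhead that can make things worse in practice.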

Even so, cores are a key part of modern computing. Without them, we couldn’t run complex programs or handle multiple tasks simultaneously.

What is a Thread?

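Okay, let’s move on to threads. Threads also enable parallel processing, but they operate at a different level. Rather than being a physical processing unit like a core, a thread is a sequence of instructions that the operating system schedules onto a core. On CPUs with simultaneous multithreading (Intel markets its version as Hyper-Threading), a single core also presents itself as two or more hardware threads that share that core’s resources.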

A single core can handle multiple threads by switching between them quickly enough that they appear to run simultaneously.
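To see this kind of switching in action, here is a small Python sketch using the standard `threading` module. The `worker` function and the thread names "A" and "B" are made up for illustration. Each thread records a step and then briefly sleeps, which gives the scheduler a chance to run the other thread.

```python
import threading
import time


def worker(name: str, steps: int, log: list) -> None:
    """Record each step, then pause so another thread can be scheduled."""
    for step in range(steps):
        # list.append is safe to call from several threads in CPython.
        log.append((name, step))
        time.sleep(0.01)  # give up the CPU for a moment


log = []
threads = [
    threading.Thread(target=worker, args=(name, 3, log))
    for name in ("A", "B")
]
for t in threads:
    t.start()
for t in threads:
    t.join()  # wait for both threads to finish

print(log)
```

The exact order of entries can differ from run to run, since the scheduler decides when each thread gets its turn, but you will usually see steps from A and B interleaved rather than all of A followed by all of B.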

So why rely on multiple threads per core rather than simply adding more cores? One advantage is lower overhead: threads running on the same core share its caches, so they need less communication between separate processing units.

Additionally, threads are more flexible than cores. Because they are created by software, they can be started and stopped as needed, whereas the number of physical cores is fixed by the hardware.

However, there are some limitations to using threads as well. Since they all share the same core, they can interfere with each other’s processing if not carefully managed. Additionally, not all tasks can be parallelized into threads – just like with cores, some tasks require a specific order of operations or rely on sequential data.
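Here is what “carefully managed” can look like in practice. This is a minimal Python sketch, with a hypothetical `SafeCounter` class, in which several threads update one shared value. The lock makes sure only one thread at a time performs the read-modify-write step, so no updates are lost.

```python
import threading


class SafeCounter:
    """A counter that several threads can increment without losing updates."""

    def __init__(self) -> None:
        self.value = 0
        self._lock = threading.Lock()

    def increment(self) -> None:
        # Without the lock, two threads could read the same old value
        # and both write back old + 1, dropping one increment.
        with self._lock:
            self.value += 1


def count_with_threads(n_threads: int = 4, increments: int = 10_000) -> int:
    counter = SafeCounter()

    def work() -> None:
        for _ in range(increments):
            counter.increment()

    threads = [threading.Thread(target=work) for _ in range(n_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counter.value


total = count_with_threads()
print(total)  # 40000
```

The trade-off is that threads waiting for the lock are not doing useful work, which is one reason shared state limits how much parallel speedup you can get.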

Threads can be a valuable tool for certain types of tasks, especially when managing multiple small tasks that don’t require a lot of processing power individually. However, they’re not a magic solution to improving performance – like with cores, there are limits to how much parallelization is possible.

Difference Between Cores and Threads

Now that we’ve covered cores and threads separately, let’s compare them and see how they stack up against each other.

In terms of raw performance, cores generally have the edge. Since they’re physical processing units, each one has dedicated resources such as execution units and low-level cache, so work running on one core does not compete with work on another for those resources. Additionally, having multiple cores means you can run more resource-intensive tasks at once without slowing down your system.

However, threads have their advantages as well. As mentioned earlier, they require less overhead than separate cores and can be more flexible regarding task management. Supporting an extra hardware thread on an existing core also generally costs less power than adding a whole additional core.

So which one should you use? Well, it depends on what you’re trying to do. You’ll likely benefit from having multiple cores if you’re running programs that require a lot of processing power, like video editing software or 3D modeling tools.

On the other hand, if you’re doing more lightweight tasks like web browsing or word processing, you might not notice much difference between using cores vs. threads.

The best solution is often a combination of both. Many modern CPUs have multiple cores and multithreading support, allowing them to utilize both technologies’ strengths. As always, it’s important to consider your specific use case and weigh the pros and cons of each option before making a decision.
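When a CPU combines both, the operating system sees one “logical processor” per hardware thread. Here is a short Python sketch of that arithmetic; the 8-core, 2-threads-per-core layout is a hypothetical example, and `logical_processors` is a helper invented for illustration.

```python
import os


def logical_processors(cores: int, threads_per_core: int) -> int:
    """Logical processors the operating system sees for a given CPU layout."""
    return cores * threads_per_core


# Hypothetical CPU: 8 cores with 2 hardware threads each.
print(logical_processors(8, 2))  # 16

# os.cpu_count() reports logical processors, not physical cores,
# and may return None when the count cannot be determined.
reported = os.cpu_count()
print("Logical processors on this machine:",
      reported if reported is not None else "unknown")
```

This is why a spec sheet might list “8 cores / 16 threads” while your system monitor shows 16 CPU graphs.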

Cores vs Threads Comparison Table

| Aspect | Cores | Threads |
|---|---|---|
| Definition | Independent processing units within a CPU | Separate paths of execution within a single core |
| Function | Allows for parallel processing of tasks | Allows for multitasking within a single core |
| Number per CPU | Typically 4-8 in consumer CPUs, but 64 or more in high-end CPUs | Typically 2 per core, but some server CPUs support 4 or 8 |
| Speed | Faster processing for each core | Less processing power per thread, but can handle more tasks simultaneously |
| Efficiency | Highly efficient for tasks that require a lot of processing power | More efficient for tasks that require managing multiple small tasks |
| Programming | Typically requires explicit parallel programming to fully utilize all cores | Can be used with multithreaded programming to take advantage of all available threads |
| Cost | Can be more expensive to manufacture CPUs with more cores | Generally less expensive than adding more cores |
| Power Consumption | Can consume more power when all cores are active | Typically more power-efficient than using multiple cores for the same task |

Applications

Now that we’ve covered the basics of cores and threads and compared them, let’s talk about some specific applications where they can be useful.

Having multiple cores can be a huge advantage for tasks that require a lot of processing power, like video editing, 3D rendering, or scientific simulations. These types of tasks often involve complex calculations that can be split up and run simultaneously on different cores, leading to a significant speedup in performance.
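The “split it up, run the pieces, combine the results” pattern looks roughly like this in Python. This is a sketch under stated assumptions: the sum-of-squares workload and the helper names are invented for illustration, and it uses `ThreadPoolExecutor` to keep the example simple. In standard CPython, threads do not execute Python bytecode on several cores at once, so for a real CPU-heavy job you would typically swap in `ProcessPoolExecutor`, which offers the same `map` interface.

```python
from concurrent.futures import ThreadPoolExecutor


def sum_of_squares(bounds: tuple) -> int:
    """Compute one independent piece of the overall job."""
    start, stop = bounds
    return sum(i * i for i in range(start, stop))


def split_range(n: int, parts: int) -> list:
    """Split range(n) into `parts` contiguous (start, stop) chunks."""
    step, extra = divmod(n, parts)
    chunks, start = [], 0
    for i in range(parts):
        # The first `extra` chunks get one additional item each.
        stop = start + step + (1 if i < extra else 0)
        chunks.append((start, stop))
        start = stop
    return chunks


def chunked_sum_of_squares(n: int, workers: int = 4) -> int:
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # Each chunk is handed to a worker; partial results are then combined.
        return sum(pool.map(sum_of_squares, split_range(n, workers)))


print(chunked_sum_of_squares(100_000) == sum(i * i for i in range(100_000)))  # True
```

The key property is that no chunk depends on another chunk’s result, which is exactly what makes workloads like rendering and simulation good candidates for multiple cores.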

On the other hand, for more lightweight tasks like web browsing or word processing, threads may be more helpful. While these tasks don’t require a lot of processing power individually, they often involve multiple small tasks that can benefit from being split up and managed in parallel.

Another area where threads can be useful is in server applications. Web servers, for example, often need to handle multiple requests at once from different users – using threads can help manage these requests more efficiently and prevent any user from hogging all of the server’s resources.
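As a rough illustration of the server case, here is a Python sketch that uses a fixed-size thread pool. `handle_request` is a stand-in invented for this example (it just sleeps to imitate waiting on a database or the network), and no real web framework is involved.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def handle_request(request_id: int) -> str:
    """Pretend to serve one request; the sleep imitates waiting on I/O."""
    time.sleep(0.05)
    return f"response {request_id}"


def serve(request_ids, max_workers: int = 4) -> list:
    # max_workers caps how many requests are in progress at once,
    # so a burst of requests cannot claim unlimited resources.
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(handle_request, request_ids))


start = time.perf_counter()
responses = serve(range(8))
elapsed = time.perf_counter() - start

print(responses[:2])
print(f"handled {len(responses)} requests in {elapsed:.2f}s")
```

Because the threads spend most of their time waiting, eight requests with four workers should finish in well under the 0.4 seconds that handling them one after another would take.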

The applications where cores and threads are useful vary widely depending on the task. However, it’s safe to say that, in general, having multiple cores or using threads can improve the performance of resource-intensive tasks and make it easier to manage multiple tasks simultaneously.

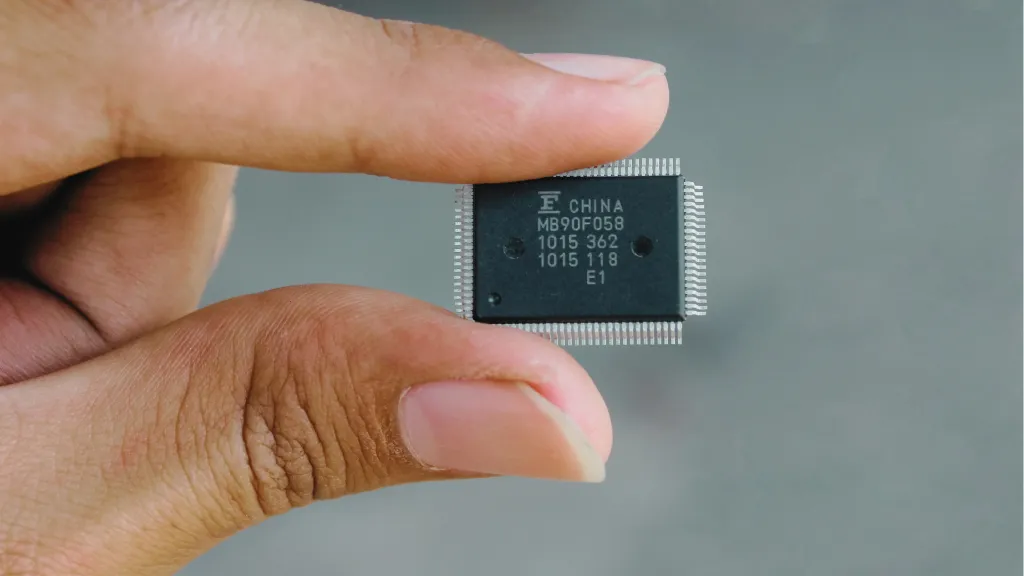

Future of Cores and Threads

So what does the future hold for cores and threads? We’ll likely continue to see improvements in both technologies as CPUs become more powerful and efficient.

One area where we might see significant advancements is in the number of cores on a single CPU. Currently, most consumer CPUs have between four and eight cores, but some high-end workstation and server CPUs have 64 cores or more.

As technology continues to improve, we may see even more cores on a single chip, which could lead to even greater improvements in performance for resource-intensive tasks.

Similarly, we may also see improvements in the efficiency and flexibility of threads. Some new programming models, like the actor model, aim to make writing parallel programs using threads easier by providing higher-level abstractions for managing concurrency.
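To give a feel for the actor model, here is a deliberately tiny Python sketch built from the standard `queue` and `threading` modules. `CounterActor` is a hypothetical class written for this article’s purposes, not the API of any actor library. The idea is that the actor owns its state, and other threads never touch that state directly; they only send messages to its mailbox.

```python
import queue
import threading


class CounterActor:
    """An actor that changes its own state only in response to messages."""

    _STOP = object()  # sentinel message telling the actor to shut down

    def __init__(self) -> None:
        self._mailbox = queue.Queue()
        self._count = 0
        self._thread = threading.Thread(target=self._run)
        self._thread.start()

    def send(self, amount: int) -> None:
        """Drop a message in the mailbox; safe to call from any thread."""
        self._mailbox.put(amount)

    def stop(self) -> int:
        """Ask the actor to finish, wait for it, and return the final count."""
        self._mailbox.put(self._STOP)
        self._thread.join()
        return self._count

    def _run(self) -> None:
        # Messages are processed one at a time, so no lock is needed here.
        while True:
            message = self._mailbox.get()
            if message is self._STOP:
                break
            self._count += message


def send_many(actor: CounterActor, times: int) -> None:
    for _ in range(times):
        actor.send(1)


actor = CounterActor()
senders = [threading.Thread(target=send_many, args=(actor, 1000)) for _ in range(4)]
for s in senders:
    s.start()
for s in senders:
    s.join()

final = actor.stop()
print(final)  # 4000
```

Compared with sharing a variable and guarding it with locks, the concurrency handling lives in one place (the mailbox), which is the kind of higher-level abstraction these models aim for.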

Beyond multiple cores and threads, hardware such as GPUs and FPGAs offers other ways to parallelize computations.

Of course, we must overcome challenges as we continue developing and refining these technologies. One big challenge is figuring out how to efficiently manage and coordinate multiple cores and threads without creating bottlenecks or wasting resources.

Additionally, as we rely more and more on parallel processing, it becomes increasingly important to ensure that our programs are designed in a way that can take full advantage of the available resources.

The future of cores and threads looks promising, with plenty of potential for continued improvements and innovations. As always, it will be important to stay up-to-date on new developments and consider the strengths and limitations of these technologies when choosing hardware or designing programs.

Conclusion

Cores and threads are both important technologies that can significantly impact the performance and efficiency of modern CPUs. By allowing for parallel processing of tasks, these technologies make it possible to perform resource-intensive computations more quickly and efficiently, enabling us to manage multiple tasks simultaneously.

While cores and threads are often compared and contrasted, the reality is that both technologies have their strengths and weaknesses, and the choice of which to use will depend largely on the specific task at hand.

Multiple cores can be a huge advantage for tasks that require a lot of processing power, while for tasks that involve managing multiple small tasks, threads may be more useful.

Looking to the future, it’s likely that we’ll continue to see improvements in both cores and threads as CPUs become more powerful and efficient. Whether through more cores on a single chip or new programming models that make it easier to write parallel programs, there’s plenty of potential for continued innovation in this space.

Understanding the strengths and limitations of cores and threads is important in choosing hardware and designing programs that can take full advantage of modern CPU technology.

With this knowledge in mind, we can continue to push the boundaries of what’s possible with parallel processing and achieve even greater gains in performance and efficiency in the years to come.